OpenAI, the startup behind DALL-E, has released Point·E, a new system that combines several models to generate low-resolution 3D objects in a matter of minutes.

Point·E was open-sourced on December 20, with its source code published on GitHub. OpenAI also released a paper detailing the system, the methods used to train it, and its key features and limitations.

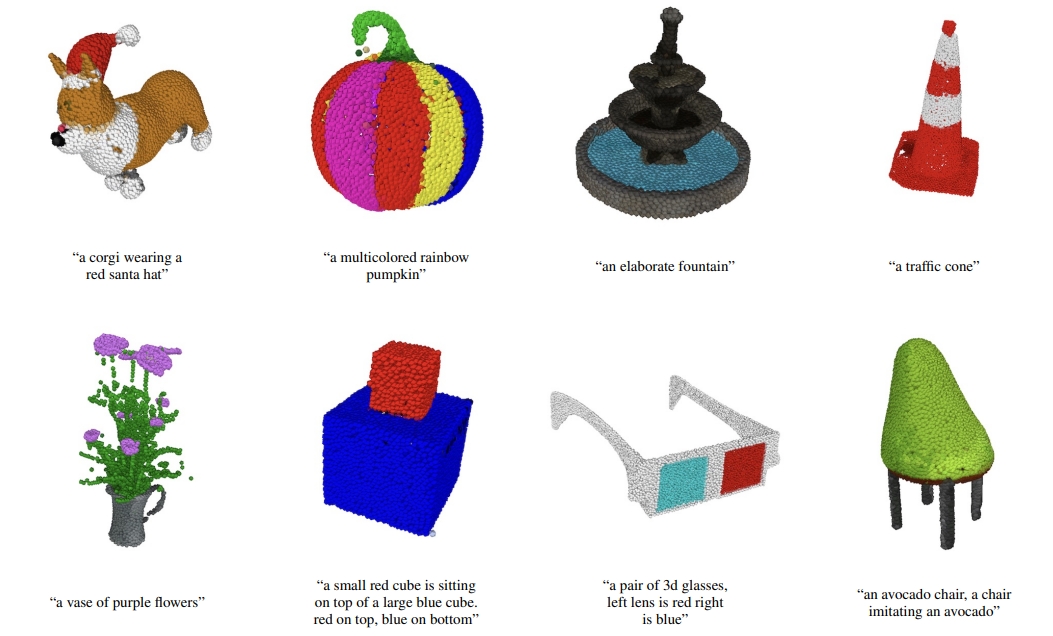

Like Google’s DreamFusion and Nvidia’s Magic3D, Point·E turns text prompts into 3D objects, but it does so much faster. The trade-off is quality: the system cannot generate truly high-quality models.

How does Point·E work?

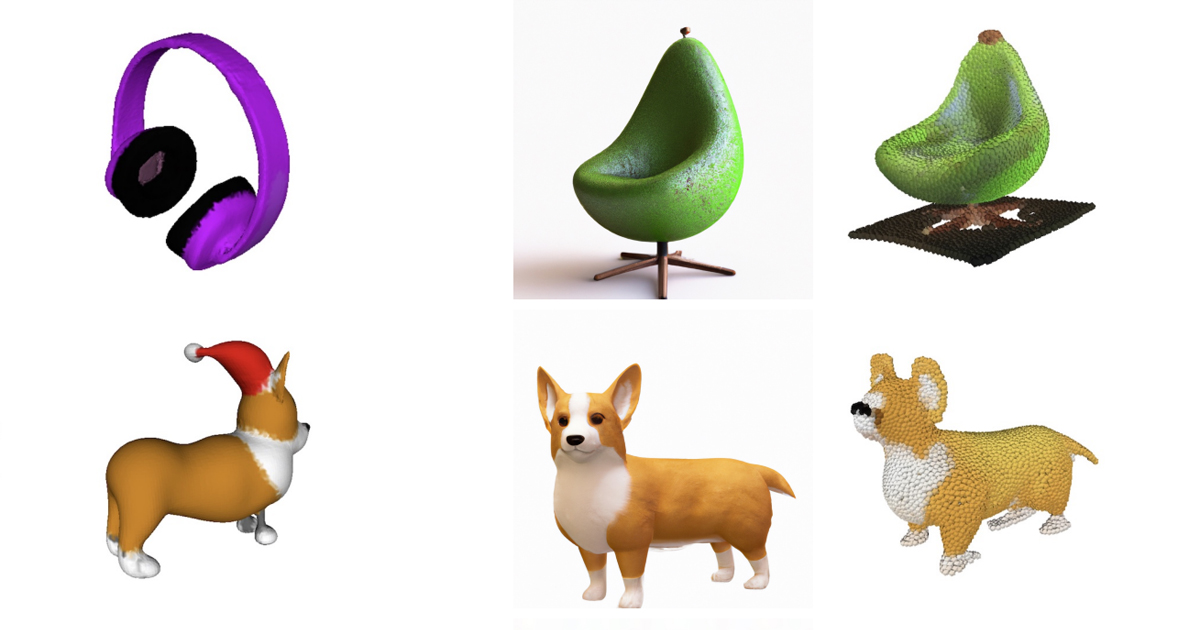

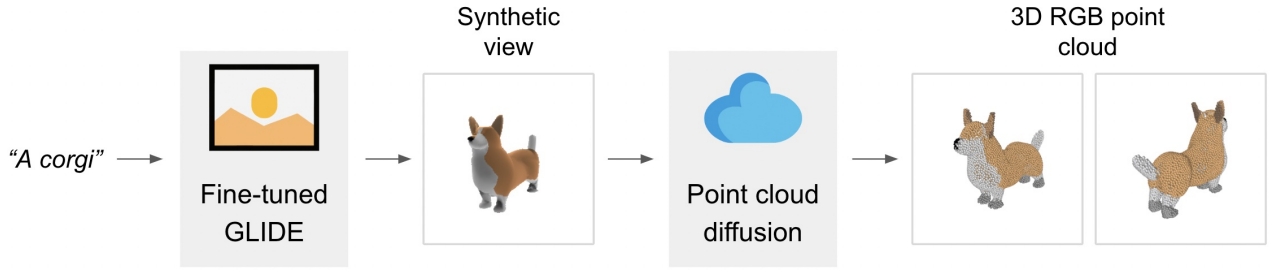

From a text prompt, the system generates RGB point clouds: discrete sets of colored data points that together represent a 3D shape or object.
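To make the data structure concrete, here is a minimal sketch of an RGB point cloud: a plain list of points, each carrying a 3D position and a color. The `ColoredPoint` class, the `sample_sphere_cloud` helper, and the point count are hypothetical choices for illustration only; this is not Point·E's internal representation, and the sphere simply stands in for a generated object.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class ColoredPoint:
    # Hypothetical record: position in 3D space...
    x: float
    y: float
    z: float
    # ...plus RGB color channels in [0, 1]
    r: float
    g: float
    b: float


def sample_sphere_cloud(n: int, radius: float = 1.0, seed: int = 0) -> list:
    """Return n colored points lying on a sphere (a toy RGB point cloud)."""
    rng = random.Random(seed)
    points = []
    for _ in range(n):
        # A normalized 3D Gaussian sample is uniformly distributed on the sphere
        dx, dy, dz = (rng.gauss(0.0, 1.0) for _ in range(3))
        norm = math.sqrt(dx * dx + dy * dy + dz * dz) or 1.0
        x, y, z = (radius * c / norm for c in (dx, dy, dz))
        # Color by height: red at the bottom, blue at the top
        t = (z / radius + 1.0) / 2.0
        t = min(max(t, 0.0), 1.0)  # guard against floating-point rounding
        points.append(ColoredPoint(x, y, z, 1.0 - t, 0.0, t))
    return points


cloud = sample_sphere_cloud(1024)
print(len(cloud))  # 1024
```

A point cloud stores no connectivity between points, which is why a separate step is needed before it can be treated as a surface.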

In more detail, the process works like this: Point·E first uses a text-to-image model to synthesize a rendered view of the object described in the prompt, then feeds that image to an image-to-3D model, which produces the point cloud.
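The two-stage structure can be sketched as a simple composition of functions. Both model functions below (`fake_text_to_image`, `fake_image_to_point_cloud`) are hypothetical placeholders that return toy data; the real stages are large learned models, and this is not Point·E's actual API. The sketch only shows how the output of the first stage becomes the input of the second.

```python
from typing import Callable, List, Tuple

Pixel = Tuple[float, float, float]                       # r, g, b
Image = List[List[Pixel]]                                # H x W grid of pixels
Point = Tuple[float, float, float, float, float, float]  # x, y, z, r, g, b
PointCloud = List[Point]


def fake_text_to_image(prompt: str, size: int = 8) -> Image:
    """Hypothetical stand-in for the text-to-image model: a flat-colored image."""
    shade = (len(prompt) % 10) / 10
    return [[(shade, 0.5, 1.0 - shade) for _ in range(size)] for _ in range(size)]


def fake_image_to_point_cloud(image: Image) -> PointCloud:
    """Hypothetical stand-in for the image-to-3D model: lifts every pixel
    to a point on a flat plane, keeping the pixel's color."""
    h, w = len(image), len(image[0])
    cloud = []
    for i, row in enumerate(image):
        for j, (r, g, b) in enumerate(row):
            cloud.append((j / w, i / h, 0.0, r, g, b))
    return cloud


def text_to_point_cloud(
    prompt: str,
    text_to_image: Callable[[str], Image],
    image_to_3d: Callable[[Image], PointCloud],
) -> PointCloud:
    # Stage 1: synthesize a single rendered view of the object from the text
    image = text_to_image(prompt)
    # Stage 2: condition the 3D model on that image to produce a point cloud
    return image_to_3d(image)


cloud = text_to_point_cloud("a red traffic cone", fake_text_to_image, fake_image_to_point_cloud)
print(len(cloud))  # 64
```

Passing the stages in as plain callables keeps the pipeline itself independent of any particular model, so either placeholder could be swapped for a real implementation without changing `text_to_point_cloud`.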

Point·E does not render its point clouds directly. Instead, it can convert them into meshes by using a “regression-based model to predict the signed distance field of an object given its point cloud” and then assigning colors to each vertex of the mesh.
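A signed distance field (SDF) gives, for any point in space, its distance to the object's surface: negative inside, zero on the surface, positive outside. The sketch below walks through the two conversion steps under heavy simplifying assumptions. An analytic sphere SDF stands in for Point·E's learned regression model; a crude "keep grid points near the zero level set" filter stands in for a proper surface-extraction algorithm such as marching cubes; and each vertex takes the color of its nearest point in the original cloud, which is one plausible coloring rule assumed here for illustration. All function names are hypothetical.

```python
import math
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]
Point = Tuple[float, float, float, float, float, float]  # x, y, z, r, g, b


def sphere_sdf(p: Vec3, radius: float = 1.0) -> float:
    """Analytic SDF standing in for a learned regressor (assumption)."""
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - radius


def near_surface_vertices(sdf: Callable[[Vec3], float],
                          resolution: int = 16, extent: float = 1.5) -> List[Vec3]:
    """Crude stand-in for surface extraction: keep grid points whose SDF
    value lies within half a grid cell of zero."""
    step = 2 * extent / (resolution - 1)
    verts = []
    for i in range(resolution):
        for j in range(resolution):
            for k in range(resolution):
                p = (-extent + i * step, -extent + j * step, -extent + k * step)
                if abs(sdf(p)) < step / 2:
                    verts.append(p)
    return verts


def color_vertices(verts: List[Vec3], cloud: List[Point]) -> List[Tuple[Vec3, Vec3]]:
    """Assign each vertex the RGB color of its nearest cloud point (brute force)."""
    colored = []
    for v in verts:
        nearest = min(cloud, key=lambda q: (q[0] - v[0]) ** 2
                      + (q[1] - v[1]) ** 2 + (q[2] - v[2]) ** 2)
        colored.append((v, (nearest[3], nearest[4], nearest[5])))
    return colored


# Toy cloud: one red point at the top of the sphere, one blue point at the bottom
cloud = [(0.0, 0.0, 1.0, 1.0, 0.0, 0.0), (0.0, 0.0, -1.0, 0.0, 0.0, 1.0)]
mesh_vertices = color_vertices(near_surface_vertices(sphere_sdf), cloud)
```

Because the coloring step only looks up nearby cloud points, any noisy or missing regions in the cloud carry straight through to the mesh colors.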

Because the generated point clouds typically contain outliers and other noise, the resulting meshes often miss important details of the original shape, a problem that has yet to be solved.

OpenAI acknowledges several other limitations, including that Point·E only produces 3D shapes “at a relatively low resolution in point clouds that does not capture fine-grained shape or texture.”

“While our method still falls short of the state-of-the-art in terms of sample quality, it is one to two orders of magnitude faster to sample from, offering a practical trade-off for some use cases,” the paper reads.

According to OpenAI, Point·E can be used to create real-world products (e.g., through 3D printing). The company also hopes the system will serve as a “starting point for further work in the field of text-to-3D synthesis.”