Last week, online chat platform Discord announced the acquisition of Sentropy, a company behind AI-powered software to detect and remove online harassment.

Discord has traditionally relied on in-house human moderators assisted by volunteers. The deal will allow Discord to enhance its Trust and Safety team with Sentropy’s AI system designed to tackle online harassment and abuse.

We reached out to Sharon Fisher, a veteran of the games moderation space, for comment on the deal. Fisher was recently appointed Senior Market Development Executive at Finland-based Utopia Analytics.

Here is where she thinks the Sentropy acquisition places Discord within the larger moderation market:

“Behind this acquisition by Discord are two big changes to the wider games industry. Firstly, there has been a surge in the number of people playing games throughout the pandemic, with a corresponding increase in the volume of text chat. This surge has left many moderation strategies ineffective and unable to handle the increase of chat volumes, including toxic behaviour and unsafe content – meaning there’s a higher risk of inappropriate content making its way into the platform or game community.

Secondly, there is growing political and media pressure on social media and online gaming platforms to address the problem of toxic online behaviours that is forcing companies to change the way they moderate their platforms — and of course, there is pressure from community users themselves, demanding change.

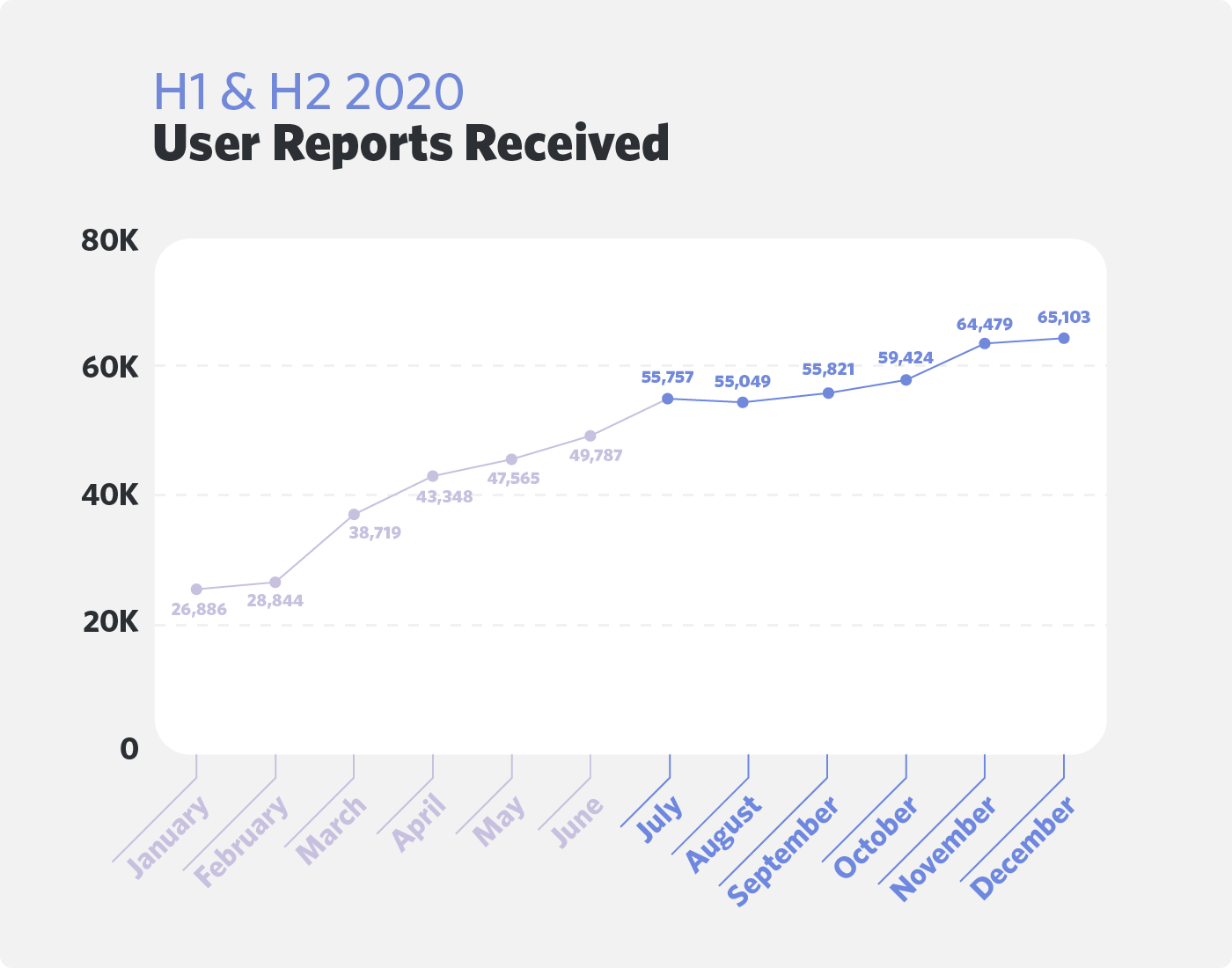

Discord’s own data shows the scale of the moderation problem.

Image Credit: Discord

By the end of 2020 there had been a 225% increase in the volume of content being flagged by the community. Even then, there are likely many more instances of offensive or insulting chat that are never reported, so it’s easy to see why Discord needed a better solution.

Issues around moderation, of course, aren’t limited to Discord. But I think it’s interesting that they have acquired Sentropy rather than try to solve this internally. Sentropy uses AI to enhance the performance of its human-based moderation, so perhaps this is an inadvertent admission that basic filtering and using human moderators just can’t address the scale of the challenge we are seeing. While this is a positive step, Sentropy’s approach is still human-led and can still suffer many of the common drawbacks associated with manual moderation. In contrast, AI-centred moderation avoids the innate bias of human moderators and the inefficiencies that come with moderating at scale, which is why it’s increasingly becoming the only feasible approach.”

Throughout her career, Fisher has worked with major gaming brands, including Supercell, Roblox and Nintendo. At Utopia Analytics, she works with games companies and brands worldwide that are looking to develop their moderation strategies.